Due to rapid changes in artificial intelligence (AI), organizations rely increasingly on advanced operations frameworks to manage complex AI systems. A separate category, called LLMOps (Large Language Model Operations), developed in response to operationalizing large language models (LLMs) such as GPT, BERT, and LLaMA. LLMs are quickly being recognized as valuable assets in supporting applications that power chatbots, content creation, and decisioning systems; thus, the security, scalability, and efficiency of LLMs is an increasing focus. Multi-Agent AI is an exciting and new advancement for LLMOps that utilizes autonomous AI agents to support operations through simplification, security, and performance enhancement. While the remainder of this blog post focuses on why Multi-Agent AI is the next phase of secure LLMOps, we have also investigated integrations with DevOps technologies, CI/CD (Continuous Integration / Continuous Delivery) pipelines utilizing ArgoCD, log monitoring systems, and other relevant areas, like MLOps, AIOps, and DevSecOps.

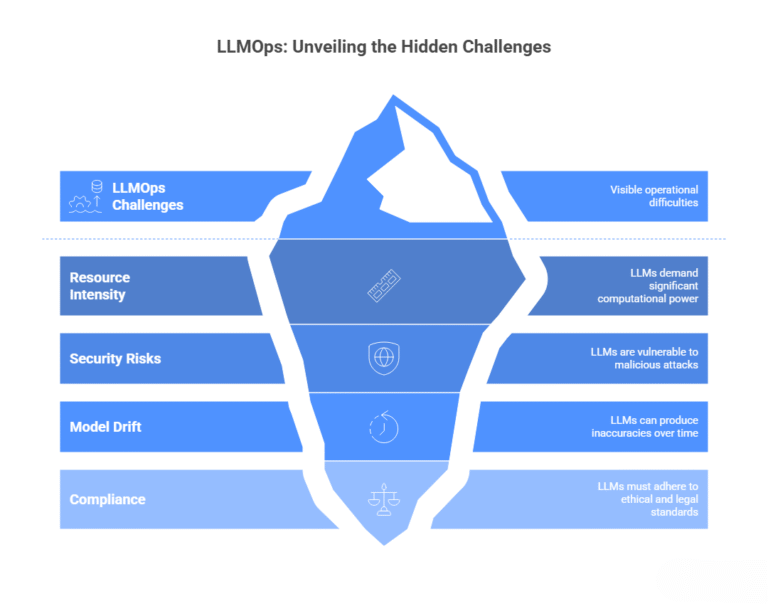

LLMOps is a specialization of MLOps which serves the operational requirements of large language models. While some constraints and principles of traditional machine learning still apply, large language models require vast computational resources, interrelated and complex prompt engineering, and ongoing monitoring of performance and ethical output risks. LLMOps accounts for data prep, model tuning, deployment, monitoring, and governance, addressing challenges such as:

These challenges necessitate a robust operational framework, and Multi-Agent AI is uniquely positioned to address them by distributing tasks across specialized AI agents, each handling specific aspects of the LLMOps lifecycle.

Multi-Agent AI is characterized by utilizing multiple autonomous AI agents working together to accomplish complex tasks. Each agent is designed to perform a specific role (e.g., data preprocessing, model monitoring, etc.) and tracks the process in real time. In LLMOps, Multi-Agent AI promotes efficiency by automating mundane tasks, improving decision-making, and securing safety through supervision. Multi-Agent AI is not simply about individual agents working interchangeably, but rather about the use of collective intelligence to speed up processes to work smarter and safer than a single agent could.

Multi-Agent AI can work seamlessly with DevOps AI tools to automate challenges in key LLMOps pipelines. For example, agents can automate the time-consuming processes of data ingestion, preprocessing, and model deployment, minimizing manual intervention. GitHub Copilot (optimizing code generation) and CircleCI (optimizing CI/CD pipelines) are examples of devops AI tools that leverage AI to promote faster and error-free deployments. Similarly, in LLMOps, agents can automate prompt optimization, confirming that LLMs will produce the accurate outputs that organizations seek while minimizing resource utilization. This form of automation presents itself in a manner that mirrors existing DevOps technologies, allowing organizations to optimally grow LLM deployments.

Continuous Integration and Continuous Deployment (CI/CD) are critical for deploying LLMs at scale. ArgoCD, a DevOps technology, facilitates GitOps-driven CI/CD by managing infrastructure as code. Multi-Agent AI enhances CI/CD with ArgoCD by assigning agents to monitor code repositories, validate configurations, and trigger deployments. For example, an agent can detect syntax errors in LLM configuration files, while another ensures compliance with security policies before deployment. This reduces errors and accelerates the deployment cycle, making LLMOps more agile and reliable.

Log monitoring systems are crucial for monitoring LLM performance and spotting anomalies. Multi-Agent AI offers these systems the unique capability to send agents into the monitoring scene to analyze logs in real time, correlate incidents, and identify root causes. Systems like Datadog and Prometheus can leverage AI-powered analytics that allow agents to detect things such a model drift and normative latency. An agent evaluating the outputs of an LLM may see toxic or biased responses and automatically initiate retraining workflows. Together, these capabilities allow LLMs to be proactively managed to maintain high reliability, safety, security, and ethical integrity for production usage.

The discussion about DevOps vs. DevSecOps emphasizes the importance of building software with security that is baked into the development lifecycle. DevSecOps includes security practices as a part of the DevOps process to ensure security vulnerabilities are patched at the beginning of the development cycle. In LLMOps, Multi-Agent AI is utilized to augment DevSecOps by deploying agents to cover the security-specific functions of the overall dev pipeline by having the agents scan for and annotate known prompt injection vulnerabilities, or perhaps encrypt ciertos pieces of sensitive data. For instance, an agent can be set up to perform commands, such as using Snyk to scan LLM codebases for known vulnerabilities, while another has set themselves up to leverage the Open Policy Agent (OPA) for governance around data processing and GDPR compliance. In turn, this leads to a trustworthy and secure LLM that handles all common attack vectors, including an adversarial attack or data breach.

Modernizing applications is key to incorporating LLMs into enterprise environments. Multi-Agent AI can facilitate this process by getting everything in place for the modernization, ranging from legacy code refactoring to deploying the LLM-enabled applications in the cloud-native stack, such as Kubernetes. Agents can automate application testing, help optimize resource allocation in the cloud infrastructure and engage application modernization compatibility checks with contemporary platforms. One scenario, for example, could involve an agent employing Retrieval-Augmented Generation (RAG) to augment the LLM output with live data so the applicable environments become more real-time responsive and relevant.

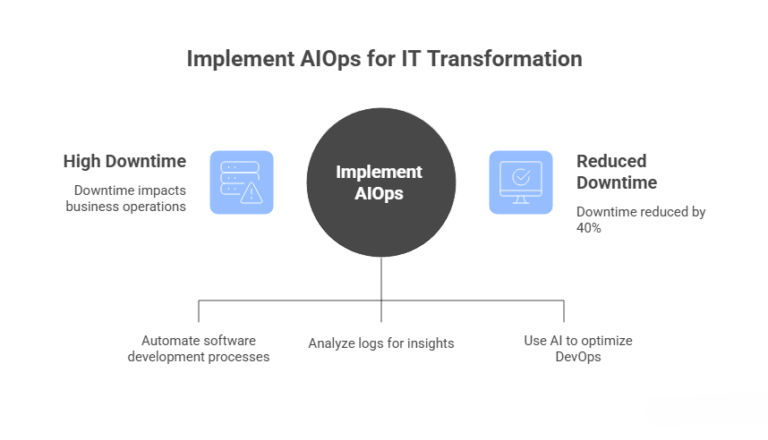

MLOps is concerned with managing traditional machine learning models. AIOps leverages AI to improve IT operations. LLMOps builds on these two frameworks while Multi-Agent AI facilitates the ability for them all to work together. For instance, MLOps agents can take responsibility for model training, AIOps agents can monitor the infrastructure, and LLMOps agents can optimize prompts and fine-tune an LLM. The ability to have this unified approach, generally known as XOps hardens the concept of holistic management of AI systems and increases the efficiency of personnel while reducing siloing.

DataOps simplifies data pipelines, resulting in high-quality datasets for training LLMs. Multi-Agent AI takes DataOps a step further by automating the monitoring and ingestion of data, including transforming data into vector databases using tools like Nexla. Likewise, FinOps provides oversight of budget, while agents can take care of monitoring cloud usage to avoid overspending on GPU clusters. Together, Multi-Agent AI, DataOps, and FinOps integrated into LLMOps ensure efficiency through both data and budget.

To maximize the benefits of Multi-Agent AI in LLMOps, organizations should follow these best practices:

DevSecCops.ai offers a full-featured AI DevOps platform that can help organizations incorporate Multi-Agent AI for LLMOps quickly. Their capabilities, to include automated threat detection, real-time monitoring, and predictive analytics, secure LLM deployment. They have expertise in app modernization and DevSecOps integration to help organizations build robust, scalable AI systems. Organizations can partner with a DevOps service firm like DevSecOps.ai to leverage Multi-Agent AI and access the promised value of LLMOps while driving innovation and maintaining security and compliance in an AI world.