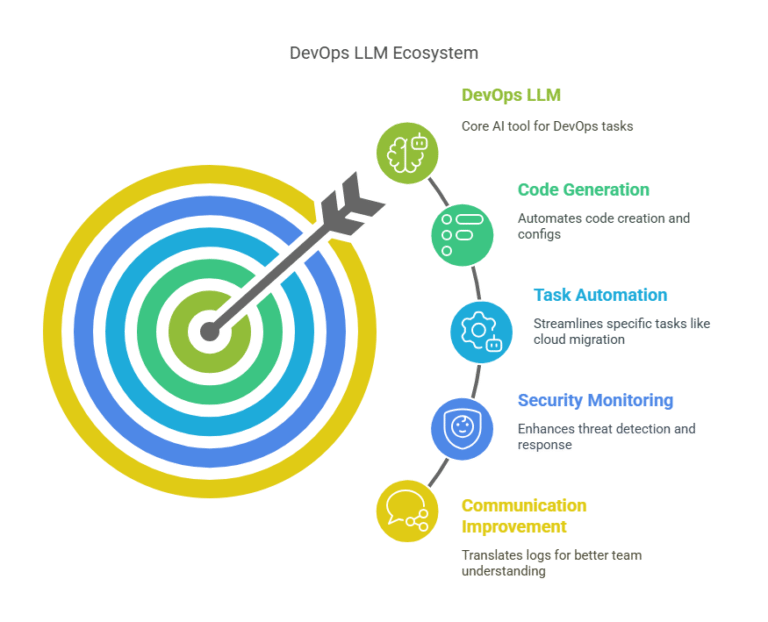

Hey, ever wondered how AI is shaking up the DevOps world? A DevOps LLM is a large language model (LLM) that works as an intelligent assistant for DevOps teams. These AI powerhouses help automate everything from writing code to running security checks, making software delivery faster and smoother. With DevOps GenAI, digital platform AI, cloud migration services, and security monitoring systems in the mix, DevOps LLM is changing the game. Let’s dive into what it does, why it’s awesome, where it gets tricky, and how it’s working in the real world, all in a way that feels like a chat over chai. Ready?

Picture DevOps LLM as your DevOps team’s new BFF. It’s an AI tool (think GPT-4, or the models you can run through Amazon Bedrock) that understands plain English and churns out code, configs, or even pipeline fixes. Instead of sweating over a Kubernetes YAML file, you just tell the LLM, “Hey, set up a cluster for me,” and bam! You get a working first draft in minutes, ready for you to review. That’s the magic of DevOps LLM.
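Here’s a rough sketch of the “review before you apply” part. It assumes you ask the model for a Deployment manifest in JSON (Kubernetes accepts JSON as well as YAML) and runs a few basic sanity checks before anything reaches `kubectl apply`. The `fake_llm` function is a hypothetical stand-in for whatever model API you actually call, and the checks are illustrative, not a full schema validation.

```python
import json

# Top-level fields every Kubernetes object manifest needs.
REQUIRED_FIELDS = ("apiVersion", "kind", "metadata", "spec")


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; always returns a canned manifest."""
    manifest = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "web"},
        "spec": {
            "replicas": 2,
            "selector": {"matchLabels": {"app": "web"}},
            "template": {
                "metadata": {"labels": {"app": "web"}},
                "spec": {"containers": [{"name": "web", "image": "nginx:1.25"}]},
            },
        },
    }
    return json.dumps(manifest)


def validate_deployment(text: str) -> list:
    """Return a list of problems found; an empty list means the basic checks passed."""
    try:
        doc = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc}"]
    if not isinstance(doc, dict):
        return ["top-level value is not an object"]
    problems = [f"missing field: {f}" for f in REQUIRED_FIELDS if f not in doc]
    if problems:
        return problems
    if doc["kind"] != "Deployment":
        problems.append(f"expected kind Deployment, got {doc['kind']}")
    spec = doc["spec"]
    template = spec.get("template", {})
    selector = spec.get("selector", {}).get("matchLabels", {})
    labels = template.get("metadata", {}).get("labels", {})
    # apps/v1 Deployments are rejected if the selector doesn't match the pod labels.
    if not selector or any(labels.get(k) != v for k, v in selector.items()):
        problems.append("selector.matchLabels does not match template labels")
    for container in template.get("spec", {}).get("containers", []):
        # Check only the last path segment so a registry port isn't mistaken for a tag.
        last_part = container.get("image", "").rsplit("/", 1)[-1]
        if ":" not in last_part or last_part.endswith(":latest"):
            problems.append(f"container {container.get('name')} should pin an image tag")
    return problems


prompt = "Create a Kubernetes Deployment named web running nginx with 2 replicas. Reply with JSON only."
issues = validate_deployment(fake_llm(prompt))
print(issues or "basic checks passed; review the diff before kubectl apply")
```

The point of the design: the model drafts, cheap deterministic checks catch obvious slips, and a human still signs off.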

It’s not just about coding. LLMs can, for instance, translate technical logs into readable summaries that the whole team can follow. Need to move your app to the cloud? LLMs streamline specific tasks, such as generating Terraform scripts, within a broader human-led process. Worried about hackers? Security monitoring systems use LLMs to spot threats faster than you can say “bug.” Terms like AI-driven DevOps or LLMOps (fancy for LLM operations) show how it’s reshaping workflows. Cool, right?
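Before logs go to a model for a plain-English summary, it usually helps to shrink them first. The sketch below keeps only WARN/ERROR lines, masks numbers and hex IDs so repeats of the same error collapse into one pattern, and wraps the top patterns in a prompt. The function names and prompt wording are hypothetical, and the actual LLM call is left out.

```python
import re
from collections import Counter


def normalize(line: str) -> str:
    """Mask variable parts (hex ids, then numbers) so repeats of one error group together."""
    line = re.sub(r"0x[0-9a-fA-F]+", "<hex>", line)
    line = re.sub(r"\d+", "<n>", line)
    return line.strip()


def build_summary_prompt(log_lines, max_patterns=5):
    """Keep WARN/ERROR lines, count each pattern, and wrap the most common ones in a prompt."""
    noisy = [normalize(l) for l in log_lines if "ERROR" in l or "WARN" in l]
    top = Counter(noisy).most_common(max_patterns)
    body = "\n".join(f"{count}x {pattern}" for pattern, count in top)
    return (
        "Summarize these recurring log patterns for a non-specialist teammate "
        "in three bullet points and suggest a likely cause:\n" + body
    )


logs = [
    "2025-01-01 10:00:01 ERROR db timeout after 3000 ms",
    "2025-01-01 10:00:05 ERROR db timeout after 3100 ms",
    "2025-01-01 10:00:06 INFO request ok",
    "2025-01-01 10:00:07 WARN disk 91% full",
]
print(build_summary_prompt(logs))
```

Sending a handful of counted patterns instead of raw logs keeps the prompt short and gives the model a clearer signal.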

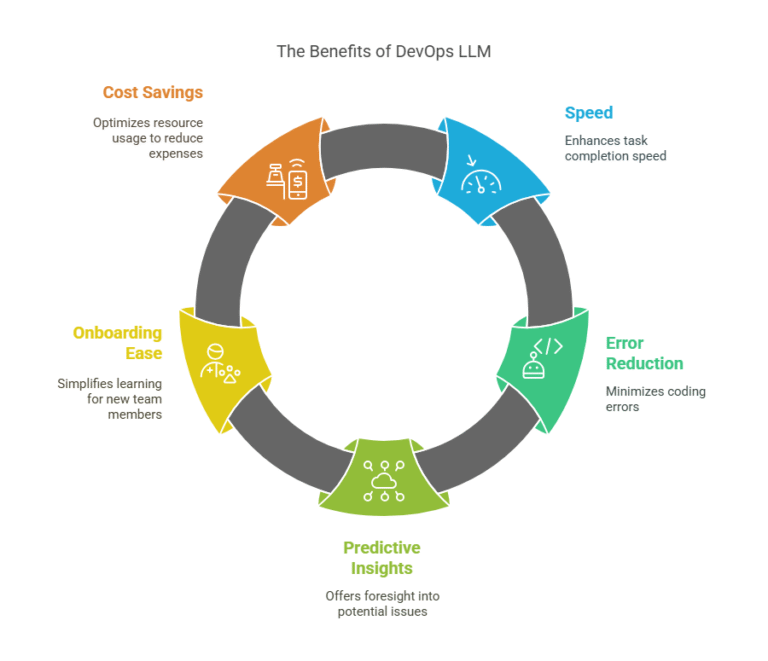

Okay, let’s break down why DevOps LLM is the hero your team needs.

Imagine you’re cooking biryani, but instead of chopping onions for hours, a robot does it in seconds. That’s DevOps LLM for tasks like writing Terraform scripts or fixing CI/CD pipelines. A fintech company in 2025 used an LLM to slash ArgoCD rollback time from 30 minutes to 2 minutes. Talk about fast! For cloud migration services, this speed means you’re up and running on AWS or Azure in no time. Workflow automation and AI-powered pipelines are the buzzwords here.

Writing code for 50 microservices is like juggling flaming torches: one slip, and it’s chaos. A DevOps LLM can draft most of that repetitive code, so you review and tweak the rest instead of writing it all by hand. For security monitoring systems, it can check your configs against the controls you need, such as those behind a SOC 2 audit, without the usual headaches. Think of it as a spell-checker for your infrastructure: semantic code generation helps keep errors at bay.
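To make the “spell-checker for infrastructure” idea concrete, here’s a minimal policy check you could run on generated configs. It assumes each resource has already been parsed into a flat dict (a simplification; real Terraform or CloudFormation output is richer), and the rule IDs and rules are hypothetical examples, not actual SOC 2 controls. The ACL values are real S3 canned ACL names.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Rule:
    rule_id: str                   # hypothetical identifier
    description: str
    check: Callable[[dict], bool]  # returns True when the resource passes


RULES = [
    Rule("S3-001", "bucket ACL must not be public",
         lambda r: r.get("acl") not in {"public-read", "public-read-write"}),
    Rule("S3-002", "server-side encryption must be enabled",
         lambda r: bool(r.get("encryption"))),
    Rule("TAG-001", "resource must carry an owner tag",
         lambda r: "owner" in r.get("tags", {})),
]


def lint(resources: dict) -> list:
    """Run every rule against every resource and return human-readable findings."""
    findings = []
    for name, resource in resources.items():
        for rule in RULES:
            if not rule.check(resource):
                findings.append(f"{name}: {rule.rule_id} {rule.description}")
    return findings


resources = {
    "logs": {"acl": "public-read", "encryption": True, "tags": {"owner": "sre"}},
    "backups": {"acl": "private", "encryption": "AES256", "tags": {"owner": "sre"}},
}
for finding in lint(resources):
    print(finding)
```

Keeping rules as small data objects makes it easy to add checks as your compliance needs grow, and to run the same list in CI on both human- and LLM-written configs.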

LLMs don’t just work; they predict. By digging through old logs, a DevOps LLM can warn you about pipeline crashes or security holes before they happen. A security monitoring system with LLMs might spot a dodgy S3 bucket setup, saving you from a data breach. Predictive DevOps and anomaly detection AI make it feel like you’ve got a sixth sense.
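Under the hood, “warning you before it happens” often starts with plain statistics over historical signals, which an LLM can then explain in words. This sketch uses a rolling z-score (a simple statistical technique, not an LLM) to flag pipeline runs whose duration jumps far above recent history. The window size, threshold, and sample durations are made-up assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value is more than `threshold` standard deviations
    above the mean of the preceding `window` values (a rolling z-score).
    `window` must be at least 2 so the standard deviation is defined."""
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma == 0:
            # Perfectly flat history: any increase counts as unusual.
            if values[i] > mu:
                flagged.append(i)
            continue
        if (values[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Made-up build durations in seconds for the last eight pipeline runs.
durations = [300, 310, 295, 305, 300, 302, 900, 298]
print(flag_anomalies(durations))  # → [6]
```

Only upward spikes are flagged here, since a slow or failing build is the usual worry; flip or drop the sign check if drops matter too.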

Starting in DevOps can feel like learning rocket science. DevOps GenAI tools like LLMs explain complex stuff—like a GitLab pipeline—in plain English, so new hires get up to speed fast. For digital platform AI, this means everyone from coders to managers understands what’s going on. It’s like having a patient tutor 24/7. AI-assisted onboarding is the key here.

Manual work costs time and money. DevOps LLM automates routine tasks, so your team focuses on building cool features instead of babysitting servers. In cloud migration services, LLMs can generate auto-scaling configurations so you pay only for the capacity you actually use. Cloud cost optimization and AI-driven resource management are your wallet’s new friends.
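Whatever writes the auto-scaling config, the scaler still does the math. Here’s a simplified version of the core ratio used by the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric), skipped when the ratio is within a tolerance (0.1 by default) and clamped to min/max. It deliberately ignores stabilization windows, missing metrics, and other details the real controller handles, and the function name is our own.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified HPA-style calculation for a single metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid churning pods
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))  # → 6
# 4 pods averaging 20% CPU against a 60% target: scale in and save money.
print(desired_replicas(4, 20, 60))  # → 2
```

The scale-in case is where the savings come from: capacity follows demand down as well as up.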

Alright, DevOps LLM isn’t all rainbows. Here’s where it can trip you up—and how to dodge those traps.

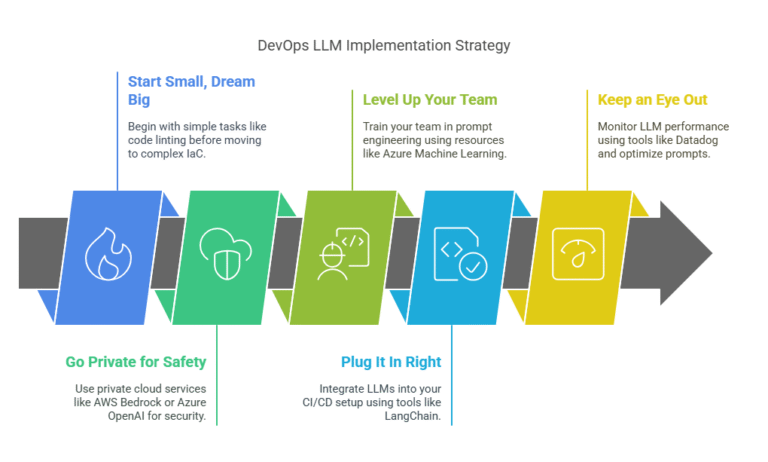

Hooking up an LLM to your Jenkins or Kubernetes setup is like teaching your grandma to use WhatsApp—possible, but it takes effort. You need solid prompts to get the right output, and that’s tough without AI know-how. Tools like Kubiya.ai help, but it’s still a puzzle. LLM integration challenges and DevOps tool compatibility are real hurdles.

Feed an LLM the wrong data, and it’s like giving your diary to a stranger. Pasting secrets or customer data into a public chatbot like ChatGPT can expose sensitive info, which is a big deal for security monitoring systems. Stick to private deployments, such as self-hosted models or managed services like Amazon Bedrock, to keep things locked down. DevOps data privacy and secure AI workflows are non-negotiable.
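Even with a private model, it’s smart to scrub obvious secrets before text leaves your pipeline. This is a minimal redaction sketch, assuming regex matching is good enough for a first pass. The pattern list is illustrative and far from complete (AKIA/ASIA are the usual AWS access key ID prefixes); a dedicated secret scanner is the safer bet in production.

```python
import re

# Illustrative patterns only; real secret scanners cover far more formats.
PATTERNS = {
    "AWS_ACCESS_KEY_ID": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "IPV4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "BEARER_TOKEN": re.compile(r"(?i)bearer\s+[A-Za-z0-9._~+/-]+=*"),
}


def redact(text: str) -> str:
    """Replace each match with a [LABEL] placeholder before the text is sent to an LLM."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("user alice@example.com from 10.0.0.12 used AKIAABCDEFGHIJKLMNOP"))
# → user [EMAIL] from [IPV4] used [AWS_ACCESS_KEY_ID]
```

Placeholders like `[IPV4]` keep enough context for the model to reason about the log without seeing the real values.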

A 2025 survey said 86% of DevOps folks double-check LLM outputs because they’re not AI wizards yet. Learning to write good prompts or verify code is a must, especially for cloud migration services where one typo can tank your migration. AI skill development and prompt engineering are where you need to level up.

Running LLMs for real-time tasks—like scanning logs in a security monitoring system—eats up serious computing power. That can spike your cloud costs if you’re not careful. AI scalability issues and DevOps compute costs mean you’ve gotta plan smart.
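A quick back-of-the-envelope estimate shows why filtering matters. This sketch uses the rough rule of thumb of about 4 characters per token for English text (real tokenizers vary) and a hypothetical price per 1,000 input tokens; plug in your provider’s actual rates. It ignores output tokens and batching.

```python
import math

# Rough rule of thumb (~4 characters per token for English text); real tokenizers vary.
CHARS_PER_TOKEN = 4.0


def prefilter(lines, keywords=("ERROR", "WARN", "denied")):
    """Keep only lines likely to matter before paying to send them to a model."""
    return [line for line in lines if any(k in line for k in keywords)]


def estimate_tokens(lines):
    return math.ceil(sum(len(line) for line in lines) / CHARS_PER_TOKEN)


def estimate_daily_cost(lines_per_day, avg_chars_per_line, usd_per_1k_tokens, keep_ratio=1.0):
    """Input-token cost only; `keep_ratio` is the fraction of lines that survive filtering."""
    tokens = math.ceil(lines_per_day * keep_ratio * avg_chars_per_line / CHARS_PER_TOKEN)
    return tokens / 1000 * usd_per_1k_tokens


# Hypothetical numbers: 10M log lines/day, 120 chars each, $0.001 per 1k input tokens.
everything = estimate_daily_cost(10_000_000, 120, 0.001)
filtered = estimate_daily_cost(10_000_000, 120, 0.001, keep_ratio=0.02)
print(f"send everything: ${everything:,.2f}/day, prefilter to 2%: ${filtered:,.2f}/day")
```

With these made-up inputs, sending only the 2% of lines that look interesting cuts the model bill by roughly 50x, which is the kind of planning that keeps compute costs sane.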

Your LLM workflows need to comply with laws like GDPR. If your DevOps LLM is auditing a cloud setup, it better not churn out biased or non-compliant results. Governance is key to avoiding ethical messes. AI compliance in DevOps and ethical AI usage keep you on the right side of the law.

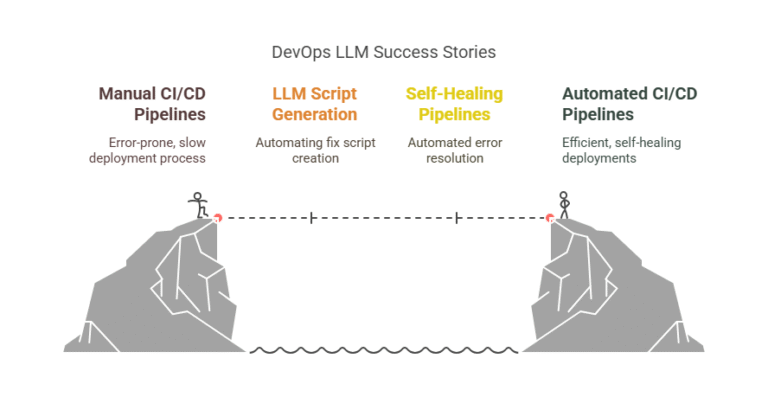

Let’s see how DevOps LLM is making waves out there.

A fintech company had a messy ArgoCD setup—failed deployments were their daily drama. They brought in DevOps LLM to auto-generate fix scripts, cutting setup time by 70%. With DevOps GenAI, their pipelines started healing themselves, like a superhero with a first-aid kit. CI/CD automation AI and self-healing DevOps were the stars here.

A financial firm used a security monitoring system powered by DevOps LLM on AWS. It caught sneaky API calls and phishing attempts in seconds, acting like a digital guard dog. Platforms like DevSecCops.ai make these setups a breeze. AI-driven security and real-time threat detection saved the day.
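Catching “sneaky API calls” often boils down to counting suspicious events per source over a short window. Here’s a sliding-window sketch, assuming simplified events sorted by time: `ts` in epoch seconds is our assumption (real CloudTrail records carry an ISO-8601 `eventTime`), alongside `sourceIPAddress` and `errorCode`. The threshold, window, and function name are hypothetical, and the IPs come from documentation-only ranges.

```python
from collections import defaultdict, deque

# Error codes treated as "denied" here; extend for your own services.
DENIED_CODES = {"AccessDenied", "UnauthorizedOperation"}


def flag_noisy_sources(events, window_s=60, max_denied=5):
    """Flag source IPs with more than `max_denied` denied calls inside any `window_s` window.
    Assumes `events` is sorted by `ts` (epoch seconds, a simplification of eventTime)."""
    recent = defaultdict(deque)  # source IP -> timestamps of recent denied calls
    flagged = set()
    for ev in events:
        if ev.get("errorCode") not in DENIED_CODES:
            continue
        window = recent[ev["sourceIPAddress"]]
        window.append(ev["ts"])
        # Drop timestamps that have slid out of the window.
        while window and ev["ts"] - window[0] > window_s:
            window.popleft()
        if len(window) > max_denied:
            flagged.add(ev["sourceIPAddress"])
    return flagged


events = [{"ts": t, "sourceIPAddress": "203.0.113.7", "errorCode": "AccessDenied"}
          for t in range(0, 12, 2)]
events += [{"ts": 5, "sourceIPAddress": "198.51.100.2", "errorCode": "AccessDenied"},
           {"ts": 7, "sourceIPAddress": "198.51.100.2"}]
events.sort(key=lambda e: e["ts"])
print(flag_noisy_sources(events))
```

A rule like this does the fast, cheap detection; an LLM layered on top can then explain a flagged burst in plain English for whoever is on call.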

A retail giant was moving to a hybrid cloud and drowning in configs. DevOps LLM whipped up Terraform scripts for AWS EC2 instances, slashing errors by 90%. This made their cloud migration services smooth as butter. AI-powered cloud migration and IaC automation were the MVPs.

An e-commerce site had logs piling up like laundry. Their DevOps LLM analyzed them in real time, spotting error spikes and explaining issues in plain English. Root cause analysis time dropped by 50%. AI log analysis and DevOps anomaly detection made life easier.

A healthcare company used digital platform AI with LLMs to turn cryptic Jira tickets into clear business goals, getting tech and non-tech teams on the same page. The LLM also churned out CI/CD configs, making collaboration a breeze. AI-driven collaboration and cross-functional DevOps sealed the deal.

Wanna make DevOps LLM work for you? Here’s the playbook:

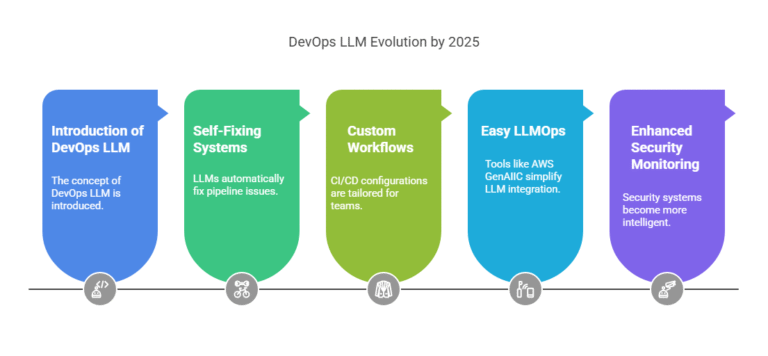

By 2025, DevOps LLM is gonna be next-level:

DevOps GenAI is paving the way, and security monitoring systems will get smarter, making DevOps LLM a must-have.

DevOps LLM is like a turbo boost for your DevOps game, speeding up pipelines, locking down security monitoring systems, and making cloud migration services a walk in the park. Sure, there are bumps—like skill gaps or data security—but the real-world wins in fintech, e-commerce, and healthcare show it’s worth it. With the right playbook and tools like DevSecCops.ai, you can ride the DevOps LLM wave to faster, safer software delivery.

Swing by DevSecCops.ai to check out resources and level up your DevOps with LLMs in 2025!